I'm running Opera Developer 111.0.5138.0 on Ubuntu 23.10. Full version details:

- Update stream: developer
- System: Ubuntu 23.10 (x86_64; ubuntu:GNOME)
- Chromium version: 124.0.6356.6
- User agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36 OPR/111.0.0.0 (Edition developer)
- Install: `/usr/lib/x86_64-linux-gnu/opera-developer`
- Profile: `/home/XXXX/.config/opera-developer/Default`
- Cache: `/home/XXXX/.cache/opera-developer/Default`

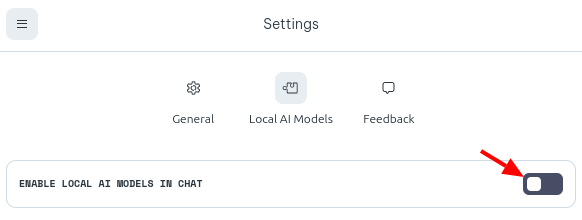

When I try to toggle on Local AI Models (the setting shown in the screenshot above), nothing happens. I get these errors in the console:

```
Unchecked runtime.lastError: Event won't fit schema: 'feature_events.event_ai'
{
  "action": "SettingChanged",
  "context": "Sidebar",
  "element": "AISetting",
  "model": null,
  "str_value": "local_model:enabled"
}
(Path: feature_events.event_ai.model
Expected: {
  "name": "event_ai_RECORD_model_ENUM",
  "symbols": [ "Unknown", "ChatGpt", "ChatSonic", "HelpChat", "ChatAlpha", "Aria" ],
  "type": "enum"
}
Actual: [null] = 'null')
```
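The "Expected" schema in this message looks like an Apache Avro enum: `model` must be one of the listed symbols, and a plain enum (as opposed to a union with `"null"`) cannot hold `null`. A minimal Python sketch of that check, using the symbols copied from the log; `model_fits_schema` is a hypothetical name and this is not Opera's actual validator:

```python
# Sketch of the enum check implied by the console message.
# MODEL_SYMBOLS is copied from the "Expected" schema in the log;
# the validation logic itself is an assumption for illustration.
MODEL_SYMBOLS = ["Unknown", "ChatGpt", "ChatSonic", "HelpChat", "ChatAlpha", "Aria"]


def model_fits_schema(event: dict) -> bool:
    """Return True if event["model"] is one of the enum symbols.

    A plain enum (not a union with "null") rejects None, which
    matches the "Actual: [null]" part of the error.
    """
    return event.get("model") in MODEL_SYMBOLS


# The event payload from the log, with JSON null written as None.
event = {
    "action": "SettingChanged",
    "context": "Sidebar",
    "element": "AISetting",
    "model": None,
    "str_value": "local_model:enabled",
}

print(model_fits_schema(event))  # False: null is not an enum symbol
```

If this reading is right, the first message only means the "setting changed" event could not be logged, since no model name was attached when the toggle fired.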

And:

```
LOCAL MODELS API -> LOCAL MODELS START ERROR: OLLAMA_PATH_NOT_VALID
(anonymous) @ aria.js:2
(anonymous) @ aria.js:2
(anonymous) @ aria.js:172
aria.js:2 aria-popup -> LOCAL MODELS ENABLE ERROR false
(anonymous) @ aria.js:2
(anonymous) @ aria.js:2
(anonymous) @ aria.js:172
p @ aria.js:2
(anonymous) @ aria.js:2
(anonymous) @ aria.js:2
Wt @ aria.js:2
s @ aria.js:2
Promise.then (async)
Wt @ aria.js:2
o @ aria.js:2
(anonymous) @ aria.js:2
(anonymous) @ aria.js:2
(anonymous) @ aria.js:172
setChecked @ aria.js:172
onChange @ aria.js:172
Le @ aria.js:2
Ge @ aria.js:2
(anonymous) @ aria.js:2
Fi @ aria.js:2
Bi @ aria.js:2
(anonymous) @ aria.js:2
xc @ aria.js:2
Ne @ aria.js:2
Vi @ aria.js:2
Qt @ aria.js:2
Ht @ aria.js:2
i @ aria.js:2
```
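The `OLLAMA_PATH_NOT_VALID` code suggests Opera could not find a usable Ollama executable, although the log does not show which path it checks. A quick sketch for confirming whether an `ollama` binary is visible at all, assuming a standard Python 3 install; `find_ollama` and the extra directories searched are hypothetical choices for illustration, not the locations Opera uses:

```python
import os
import shutil
from typing import List, Optional


def find_ollama(extra_dirs: Optional[List[str]] = None) -> Optional[str]:
    """Return the path of an executable named 'ollama', or None.

    Searches $PATH first (like a shell would), then each directory in
    extra_dirs. The directories Opera itself checks are not shown in
    the log, so extra_dirs is a hypothetical parameter.
    """
    found = shutil.which("ollama")
    if found:
        return found
    for d in extra_dirs or []:
        candidate = os.path.join(d, "ollama")
        # Require a regular file with the execute bit for this user.
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None


if __name__ == "__main__":
    path = find_ollama(["/usr/local/bin", "/usr/bin"])
    print(path or "no ollama executable found")
```

If this finds a binary from a terminal while Opera still reports the error, the browser may be looking in a different location or running with a different `PATH` than the shell (for example when launched from the GNOME desktop).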

Does anyone know why the local models are not working?

I have Node.js and pip installed on my system, and I've used GPT4All and Ollama successfully before.

Thanks!